Trapping Hackers with Containerized Deception

TL;DR

This article explores modern honeypots that leverage containerization by walking through the design of a high-interaction honeypot that can use arbitrary Docker containers to lure malicious attacks.

Introduction

While honeypots have been around for a very long time, this article attempts to provide a fresh look at how containerization has changed the way we use them today. Admittedly, I haven’t explored this topic since 2005. So, while researching for something to implement that would be equally valuable and interesting, I ran into at least half a dozen false starts. I assumed that, like every other area of computing, advanced honeypot systems would abound in the open-source community. But I suppose I underestimated both the esoteric nature of the subject and its well-guarded commercial viability.

A lot has changed since 2005, but a lot has remained the same. A honeypot is not a complicated concept; it’s a system or service that intentionally exposes itself to attackers so that they can be detected as soon as they try to break in. Unlike an intrusion detection system, a honeypot can be something as simple as a few lines of code that disguise themselves as a vulnerable open port on a system, or it can be something as advanced as a full-blown operating system with a hidden logging system that analyzes patterns of behavior.

However, as developers and systems experts incorporate containerization into their designs, many of the traditional approaches to using honeypots become far less effective. In 2005, a honeypot was often deployed once, usually somewhere easily accessible to the rest of the network. But because containerized systems are isolated from networks and from other services, deploying a honeypot in the same way becomes useless.

While developers are technically savvy, they often aren’t security literate. Even when they are, it’s common for them to prioritize convenience. This makes securing Docker an even more critical part of a security-minded DevOps professional’s job. The same could be said for overburdened systems administrators who de-emphasize security in an effort to get the job done quickly.

Much of the problem stems from the fact that developers often have a very high level of access to computing resources within the company. Systems administrators, by necessity, have an even higher level of access than developers. Not only do they have access to all the source code in the system, along with the ability to test databases, but in many cases, especially in DevOps environments, they may even have access to production systems. In addition, developers need to perform many tasks for testing purposes that look suspicious to security software, which gives them cause to disable it. This gives malicious software and attackers an even easier target to infect.

In the case of running Docker on a local Windows environment, as many developers do for development purposes, it can’t be assumed that every development system adheres to proper security configurations. In my case, I have often enabled the Docker remote API on a host for testing purposes and left it enabled, either out of forgetfulness or for convenience.

Overview of Deception Systems

Honeypots

Honeypots can be deployed alongside different types of systems in your network. They are decoys designed to lure attackers and malicious software so that the source can be detected, logged, and tracked. There are various types of honeypots. High-interaction honeypots are designed to run as a service and are meant to be complex enough to fool an attacking system into believing it is dealing with a full-featured operating system or device. Mid-interaction honeypots emulate certain aspects of an application layer without being too complex, which makes them more difficult to compromise. Low-interaction honeypots, which are what we will be discussing in this article, are easy to deploy and maintain, while serving as a simple early warning system to prevent infection of more critical systems in the environment.

Honeynets

A honeynet is a collection of honeypots designed to strategically track the methods and techniques of malicious software and attackers. This approach allows administrators to watch hackers and malicious code exploit the various vulnerabilities of the system, and can be used either in production or for research purposes to discover new vulnerabilities and attacks.

Basic Use Case

Honeypots have the advantage of not requiring detailed knowledge about network attack methodology. This is especially true of low-interaction honeypots, which are relatively simple applications that sit on a port and listen, often imitating very little of the original service. They log access attempts and do little else. Such data can be invaluable as a record of certain types of access, or as an early warning of a compromised service before there are any serious problems.
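As a rough illustration of how little a low-interaction honeypot needs to do, the sketch below listens on a port with nc (from the netcat-openbsd package) and writes one timestamped line for whatever nc reports about each connection. The log path, the default port 2323, and both function names are assumptions made for this example; they are not part of DockerTrap.

```shell
#!/bin/bash
# Sketch of a low-interaction honeypot: listen on a port, log who connects.
# LOG_FILE, the default port, and both function names are illustrative.
LOG_FILE="${LOG_FILE:-/tmp/honeypot-access.log}"

# Prefix a message with a UTC timestamp.
log_line() {
    printf '%s %s\n' "$(date -u '+%Y-%m-%dT%H:%M:%SZ')" "$1"
}

# Accept one connection at a time and record what nc reports about it.
# With -v, nc writes connection details to stderr, which is what we capture;
# anything the peer sends (nc's stdout) is discarded.
listen_and_log() {
    local port="${1:-2323}"
    while true; do
        nc -l -v "$port" </dev/null 2>&1 >/dev/null |
            while read -r line; do
                log_line "$line" >> "$LOG_FILE"
            done
    done
}

# Usage: listen_and_log 2323
```

Nothing here imitates a real service, which is the point: the only output is a record that somebody knocked.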

Background

Vulnerabilities

Using containers as honeypots has been the source of some debate, as containerization technology is comparatively immature next to full virtualization. It can also be easy for some administrators to overlook potential configuration issues, such as the ones that follow.

Docker Engine API

An API is a programming interface for applications. It is a type of protocol, or ruleset, sometimes with a layer of abstraction; regardless of implementation, it is a standard method for programs of different types to talk to one another. REST stands for “Representational State Transfer”. It is an architectural style that developers can use to exchange information with other applications: a client sends a request to a specific URL and receives data in the body of the response.

The Docker Engine API is used by the Docker CLI to manage objects. Although a UNIX socket (unix:///var/run/docker.sock) is enabled by default on Linux systems, a TCP socket (tcp://127.0.0.1:2376) is enabled by default on Windows systems. On Linux systems, for development and automation purposes, this API can also be accessed directly by remote applications as a REST API by enabling it on a TCP socket.
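To make the difference between the two sockets concrete, here is a minimal sketch that queries the Engine API with curl. The /version and /_ping endpoints are part of the Engine API; the function names, the three-second timeout, and the choice of plain HTTP (which only applies to a TCP socket exposed without TLS) are assumptions for illustration.

```shell
#!/bin/bash
# Build the URL of an Engine API endpoint served over a TCP socket.
# Arguments: host, port, endpoint (defaults to "version").
docker_api_url() {
    printf 'http://%s:%s/%s\n' "$1" "$2" "${3:-version}"
}

# Return 0 if an unauthenticated Engine API answers on host:port.
docker_api_exposed() {
    curl --silent --fail --max-time 3 \
        "$(docker_api_url "$1" "$2" _ping)" >/dev/null
}

# For comparison: the same API over the default UNIX socket on Linux.
docker_api_local_version() {
    curl --silent --unix-socket /var/run/docker.sock http://localhost/version
}

# Usage:
#   docker_api_exposed 127.0.0.1 2375 && echo "API is reachable without auth"
```

If the check succeeds against a host that was never meant to expose the API, anyone who can reach that port can manage its containers, which is exactly the kind of misconfiguration an API honeypot imitates.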

Base Images

Most of the vulnerabilities found today are in the base images themselves. In the case of Docker, the base image is composed of an operating system, often customized from another popular image found on Docker Hub. Customizations, malicious or accidental, along with the original base image, create plenty of opportunities for attacks, sometimes even by the most novice of hackers.

Docker Hub

While Docker Hub has removed malicious Docker containers in the past, as a community repository, it is wide open to abuse and attack. Base images uploaded to Docker Hub should be used with caution. But given that many administrators decide that the convenience often outweighs the risk, taking prudent security measures to mitigate the risk of attack would be warranted.

Notable Honeypot Systems

There are a number of honeypot systems in popular use; however, very few specifically target containerization or uniquely benefit from it. The following two open source packages are notable exceptions.

Modern Honey Network

MHN has a lot of potential for container integration, yet running it under Docker is not officially supported. Because it is a comprehensive honeynet management system, honeypots could be deployed as part of a K8s configuration with a little effort. The honeypot data collected from internal network sources, in particular, could be invaluable to securing a distributed container environment.

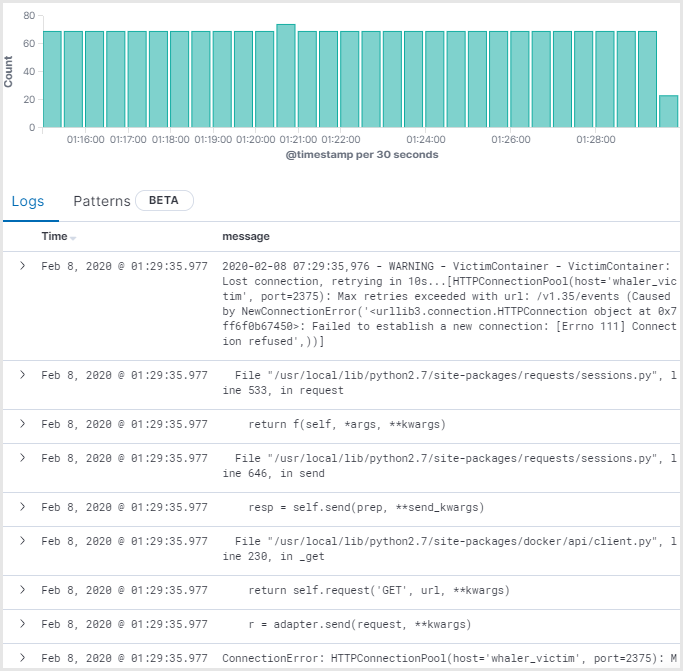

Oncyberblog’s Whaler: A Docker API Honeypot

Over a dozen GitHub repositories bear the name Whaler, yet this one by Oncyberblog was actually a contender for this article. This project is unique because it attempts to lure attackers using an exposed Docker Engine API. It does have its limitations, however. First, because it runs an embedded Docker container, Docker must be run in privileged mode on its host. This is a problem because it’s an enormous security risk that could jeopardize the host. Therefore, it would be necessary to take precautions by installing it on an isolated and secured host. The system requirements aren’t demanding, so the host doesn’t need to be of any substantial size. However, since you would need to monitor individual containers on the primary host, you would need a method of linking the segregated honeypot host to the application host on which the monitored application containers are located.

System Overview

This project makes quick use of old-school Linux internals to handle container orchestration, load balancing, and security. For more information, source code, and updates, see DockerTrap, the companion GitHub repository created for this article.

System configuration

Change default port of SSH on the host

Before doing anything else, change the default port from 22 to something else, like 2222. The system will be luring attackers using port 22, so this port should be freed of use.
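One way to make that change is to edit the Port directive in the sshd configuration. The helper below is a sketch under a few assumptions: the function name is made up for this example, the configuration lives at /etc/ssh/sshd_config, and GNU sed is available (as it is on Ubuntu).

```shell
#!/bin/bash
# Set the Port directive in an sshd_config-style file.
# Rewrites an existing "Port N" or "#Port N" line, or appends one if missing.
set_sshd_port() {
    local config="$1" port="$2"
    if grep -Eq '^#?Port[[:space:]]+[0-9]+' "$config"; then
        sed -i -E "s/^#?Port[[:space:]]+[0-9]+.*/Port ${port}/" "$config"
    else
        printf 'Port %s\n' "$port" >> "$config"
    fi
}

# Usage (as root):
#   set_sshd_port /etc/ssh/sshd_config 2222
#   sshd -t && systemctl restart ssh
```

Keep the current SSH session open until a new login on the new port has been confirmed, since a mistake here can lock you out of the host.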

Install Docker

sudo apt -y install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu bionic stable"

sudo apt update

apt-cache policy docker-ce

sudo apt -y install docker-ce

Install supporting system tools

sudo apt update

sudo apt -y install socat xinetd auditd netcat-openbsd

Configure xinetd

This system makes use of the following bash script, managed by xinetd, to spin up containers whenever an incoming connection is requested on port 22.

The following bash script should be made available to xinetd as /usr/bin/honeypot with 755 permissions and root ownership.

The EXT_IFACE variable should be changed to the interface that corresponds with the device you wish to receive incoming ssh connections on port 22.

#!/bin/bash

EXT_IFACE=ens4

MEM_LIMIT=128M

SERVICE=22

QUOTA_IN=5242880

QUOTA_OUT=1310720

REMOTE_HOST=`echo ${REMOTE_HOST} | grep -o '[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}'`

{

CONTAINER_NAME="honeypot-${REMOTE_HOST}"

HOSTNAME=$(/bin/hostname)

# check if the container exists

if ! /usr/bin/docker inspect "${CONTAINER_NAME}" &> /dev/null; then

# create new container

CONTAINER_ID=$(/usr/bin/docker run --name ${CONTAINER_NAME} -h ${HOSTNAME} -e "REMOTE_HOST=${REMOTE_HOST}" -m ${MEM_LIMIT} -d -i honeypot ) ##/sbin/init)

CONTAINER_IP=$(/usr/bin/docker inspect --format '{{ .NetworkSettings.IPAddress }}' ${CONTAINER_ID})

PROCESS_ID=$(/usr/bin/docker inspect --format '{{ .State.Pid }}' ${CONTAINER_ID})

# drop all inbound and outbound traffic by default

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -P INPUT DROP

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -P OUTPUT DROP

# allow access to the service regardless of the quota

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -A INPUT -p tcp -m tcp --dport ${SERVICE} -j ACCEPT

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -A INPUT -m quota --quota ${QUOTA_IN} -j ACCEPT

# allow related outbound access limited by the quota

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -A OUTPUT -p tcp --sport ${SERVICE} -m state --state ESTABLISHED,RELATED -m quota --quota ${QUOTA_OUT} -j ACCEPT

# enable the container to connect to rsyslog on the host

/usr/bin/nsenter --target ${PROCESS_ID} -n /sbin/iptables -A OUTPUT -p tcp -m tcp --dst 172.17.0.1 --dport 514 -j ACCEPT

# add iptables redirection rule

/sbin/iptables -t nat -A PREROUTING -i ${EXT_IFACE} -s ${REMOTE_HOST} -p tcp -m tcp --dport ${SERVICE} -j DNAT --to-destination ${CONTAINER_IP}

/sbin/iptables -t nat -A POSTROUTING -j MASQUERADE

else

# start container if exited and grab the cid

/usr/bin/docker start "${CONTAINER_NAME}" &> /dev/null

CONTAINER_ID=$(/usr/bin/docker inspect --format '{{ .Id }}' "${CONTAINER_NAME}")

CONTAINER_IP=$(/usr/bin/docker inspect --format '{{ .NetworkSettings.IPAddress }}' ${CONTAINER_ID})

# add iptables redirection rule

/sbin/iptables -t nat -A PREROUTING -i ${EXT_IFACE} -s ${REMOTE_HOST} -p tcp -m tcp --dport ${SERVICE} -j DNAT --to-destination ${CONTAINER_IP}

/sbin/iptables -t nat -A POSTROUTING -j MASQUERADE

fi

echo ${CONTAINER_IP}

} &> /dev/null

# forward traffic to the container

exec /usr/bin/socat stdin tcp:${CONTAINER_IP}:22,retry=60
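The grep expression at the top of the script accepts any four groups of digits, and it yields an empty string when xinetd reports an address with no IPv4 part, in which case the container is simply named honeypot-. A stricter check could look like the sketch below; the function name is hypothetical and the script in this article does not include it.

```shell
#!/bin/bash
# Print the first dotted quad found in $1 if every octet is 0-255.
# Returns non-zero (and prints nothing) when no valid IPv4 address is found.
extract_ipv4() {
    local candidate octet
    candidate=$(printf '%s\n' "$1" |
        grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | head -n 1)
    [ -n "$candidate" ] || return 1
    local IFS=.
    for octet in $candidate; do
        # 10# forces base 10 so that "08" is not read as octal
        [ "$((10#$octet))" -le 255 ] || return 1
    done
    printf '%s\n' "$candidate"
}

# Possible use near the top of /usr/bin/honeypot:
#   REMOTE_HOST=$(extract_ipv4 "${REMOTE_HOST}") || exit 1
```

Exiting early on an unusable address avoids creating a container and iptables rules that cannot be tied back to a source.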

The following service file should be created as /etc/xinetd.d/honeypot:

# Container launcher for an SSH honeypot

service honeypot

{

disable = no

instances = UNLIMITED

server = /usr/bin/honeypot

socket_type = stream

protocol = tcp

port = 22

user = root

wait = no

log_type = SYSLOG authpriv info

log_on_success = HOST PID

log_on_failure = HOST

}

Then, /etc/services should be updated to include the following, reflecting the new ssh port and the honeypot port at port 22:

ssh 2222/tcp

honeypot 22/tcp

Configure crond

To handle stopping and cleaning up old containers, the following bash script should be deployed to /usr/bin/honeypot.clean with 755 permissions and root ownership.

#!/bin/bash

EXT_IFACE=ens4

SERVICE=22

HOSTNAME=$(/bin/hostname)

LIFETIME=$((3600 * 6)) # Six hours

datediff () {

d1=$(/bin/date -d "$1" +%s)

d2=$(/bin/date -d "$2" +%s)

echo $((d1 - d2))

}

for CONTAINER_ID in $(/usr/bin/docker ps -a --no-trunc | grep "honeypot-" | cut -f1 -d" "); do

STARTED=$(/usr/bin/docker inspect --format '{{ .State.StartedAt }}' ${CONTAINER_ID})

RUNTIME=$(datediff now "${STARTED}")

if [[ "${RUNTIME}" -gt "${LIFETIME}" ]]; then

logger -p local3.info "Stopping honeypot container ${CONTAINER_ID}"

/usr/bin/docker stop $CONTAINER_ID

fi

RUNNING=$(/usr/bin/docker inspect --format '{{ .State.Running }}' ${CONTAINER_ID})

if [[ "$RUNNING" != "true" ]]; then

# delete iptables rule

CONTAINER_IP=$(/usr/bin/docker inspect --format '{{ .NetworkSettings.IPAddress }}' ${CONTAINER_ID})

REMOTE_HOST=$(/usr/bin/docker inspect --format '{{ .Name }}' ${CONTAINER_ID} | cut -f2 -d-)

/sbin/iptables -t nat -D PREROUTING -i ${EXT_IFACE} -s ${REMOTE_HOST} -p tcp --dport ${SERVICE} -j DNAT --to-destination ${CONTAINER_IP}

logger -p local3.info "Removing honeypot container ${CONTAINER_ID}"

/usr/bin/docker rm $CONTAINER_ID

fi

done

To run the above script every 5 minutes, append the following to /etc/crontab.

*/5 * * * * root /usr/bin/honeypot.clean
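One thing the cleanup script does not undo: /usr/bin/honeypot appends a MASQUERADE rule, and a PREROUTING rule for returning visitors, on every connection, while the cleanup deletes a single PREROUTING entry per container. Duplicate rules can therefore pile up over time. A possible guard, sketched here with a hypothetical helper, is to ask iptables whether a rule already exists (its -C option) before appending it.

```shell
#!/bin/bash
# Path to iptables; overridable so the helper can be exercised without root.
IPTABLES="${IPTABLES:-/sbin/iptables}"

# Append a rule only when an identical one is not already present.
# Usage: append_rule_once <table> <chain> <rule specification...>
append_rule_once() {
    local table="$1" chain="$2"
    shift 2
    "$IPTABLES" -t "$table" -C "$chain" "$@" 2>/dev/null ||
        "$IPTABLES" -t "$table" -A "$chain" "$@"
}

# Example, in place of the unconditional append in /usr/bin/honeypot:
#   append_rule_once nat POSTROUTING -j MASQUERADE
```

This keeps the nat table at one rule per source address plus a single MASQUERADE rule, so the matching delete in the cleanup script removes everything that was added for a container.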

Configure auditd

Enable logging of the execve system call in auditd by adding the following audit rules:

auditctl -a exit,always -F arch=b64 -S execve

auditctl -a exit,always -F arch=b32 -S execve
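Rules added with auditctl last only until the audit daemon is restarted. To keep them across reboots, the same rules can be written to a file under /etc/audit/rules.d/ and loaded with augenrules. The sketch below also tags the rules with a key so that matching events are easier to find; the key name honeypot_exec, the file name, and the function name are assumptions for this example.

```shell
#!/bin/bash
# Emit persistent audit rules for execve, tagged with a search key.
honeypot_audit_rules() {
    local key="${1:-honeypot_exec}"
    printf -- '-a always,exit -F arch=b64 -S execve -k %s\n' "$key"
    printf -- '-a always,exit -F arch=b32 -S execve -k %s\n' "$key"
}

# Usage (as root):
#   honeypot_audit_rules > /etc/audit/rules.d/honeypot.rules
#   augenrules --load
#   ausearch -k honeypot_exec -i    # review the recorded commands
```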

Deploy apitrap.sh

The apitrap.sh script is an optional component that attempts to simulate a Docker API on the host. Since this is a bash script, it is recommended that it be run from a Docker container, as an unprivileged user, and redirected to port 2375 or 2376 in order to avoid potential exploits.

#!/bin/bash

## Docker API heading

H1="HTTP/1.1 404 Not Found\n"

H2="Content-Type: application/json\n"

H3="Date: "`date '+%a, %d %b %Y %T %Z'`"\n"

H4="Content-Length: 29\n\n"

## API error message

B1="{\"message\":\"page not found\"}\n"

HEADERS+=$H1$H2$H3$H4

## Default to port 2376 if no port is given

if ! test -z "$1"; then

PORT=$1;

else

PORT=2376;

fi

QUEUE_FILE=/tmp/apitrap

test -p $QUEUE_FILE && rm $QUEUE_FILE

mkfifo $QUEUE_FILE

while true; do

cat "$QUEUE_FILE" | nc -l "$PORT" | while read -r line || [[ -n "$line" ]]; do

if echo $line | grep -q 'GET \|HEAD \|POST \|PUT \|DELETE \|CONNECT \|OPTIONS \|TRACE'; then

echo ">>> ["$(date)"] <<<"

echo : $line

echo -e $HEADERS$B1 > $QUEUE_FILE

fi

done

done
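The script above hard-codes Content-Length: 29, which is only correct while the body stays exactly as written. If you change the error message, a small helper can compute the length instead. This is a sketch, not part of apitrap.sh; the function name is hypothetical, and counting characters with ${#body} assumes an ASCII body.

```shell
#!/bin/bash
# Build a 404 response whose Content-Length always matches the body.
build_response() {
    local body="$1"
    local length=${#body}   # character count; equals bytes for ASCII text
    printf 'HTTP/1.1 404 Not Found\r\n'
    printf 'Content-Type: application/json\r\n'
    printf 'Date: %s\r\n' "$(LC_ALL=C date -u '+%a, %d %b %Y %H:%M:%S GMT')"
    printf 'Content-Length: %d\r\n\r\n' "$length"
    printf '%s' "$body"
}

# Possible use inside the loop, in place of the echo -e line:
#   build_response $'{"message":"page not found"}\n' > "$QUEUE_FILE"
```

With the trap running, a request such as curl -i http://localhost:2376/version from another terminal is a quick way to confirm that it answers and logs the request line.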

You would need to make appropriate changes to the system, but it should be possible to obtain the host IP from inside any honeypot container you decide to deploy. For example:

root@dockerhost:~# hostname -I |awk '{ print $2 }'

172.17.0.1

root@dockerhost:~# docker run -it honeypot-test /sbin/ip route|awk '/default/ { print $3 }'

172.17.0.1

root@dockerhost:~#

The first command shows that the host’s address on the Docker bridge is 172.17.0.1. Retrieving the container’s default gateway reveals that it is also 172.17.0.1. This quite easily makes the host IP available for attack from the container. We should therefore exploit this for our project by making sure the configuration for DockerTrap is redirected and available on the same host. However, those details will not be discussed here. Look for updates on the DockerTrap GitHub repository.

Build honeypot image from Dockerfile

The main feature of this image is that sshd is enabled, root login is enabled, and the password for root is set to root. You should modify this to include other user accounts, along with trivial passwords that will help lure more attacks.

FROM alpine:3.9

ENTRYPOINT ["/entrypoint.sh"]

EXPOSE 22

## Root is gloriously unsecure!

RUN apk add --no-cache openssh \

&& sed -i s/#PermitRootLogin.\*/PermitRootLogin\ yes/ /etc/ssh/sshd_config \

&& echo "root:root" | chpasswd

RUN echo -e '#!/bin/ash\n\nssh-keygen -A\n/usr/sbin/sshd -D -e "$@"' > /entrypoint.sh

RUN chmod 555 /entrypoint.sh

Commit final honeypot image

The system looks for a base image committed as honeypot:latest. As an ssh connection is made, the system automatically creates a unique instance of this image with the honeypot- prefix. Modify this image as needed.
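Assuming the Dockerfile above is saved in the current directory, one way to produce that image is to build it with the expected tag. The wrapper functions and the DOCKER variable below are conveniences invented for this sketch; only the docker build and docker image inspect commands themselves come from the Docker CLI.

```shell
#!/bin/bash
# Docker binary to call; overridable, for example for a dry run.
DOCKER="${DOCKER:-docker}"

# Build the honeypot image from the Dockerfile in the given directory.
build_honeypot_image() {
    local context="${1:-.}"
    "$DOCKER" build -t honeypot:latest "$context"
}

# Return 0 if the image that /usr/bin/honeypot expects is present locally.
honeypot_image_exists() {
    "$DOCKER" image inspect honeypot:latest >/dev/null 2>&1
}

# Usage:
#   build_honeypot_image . && honeypot_image_exists && echo "image ready"
```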

Testing

SSH into honeypot

Each of the dynamically created honeypots will adopt the hostname of the host. At this point, if any socket connection is established on port 22 of localhost (or any other network adapter on the host that is configured to be used by DockerTrap), it will be redirected to the sshd daemon inside one of the honeypot containers. The Bash script /usr/bin/honeypot, triggered by xinetd, makes sure that each IP address is directed to its corresponding container, so if an attacker attempts to log in from the same IP address a second, third, or fourth time, they will log into the same container each time.

dockertrap:~# ssh root@localhost -p 22

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ECDSA key fingerprint is SHA256:dY6EIpV1nBw5143TkgPQU5SRWIkrxkZCiLWd+ktiNKE.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

root@localhost's password:

Welcome to Alpine!

The Alpine Wiki contains a large amount of how-to guides and general

information about administrating Alpine systems.

See <http://wiki.alpinelinux.org/>.

You can setup the system with the command: setup-alpine

You may change this message by editing /etc/motd.

dockertrap:~# ifconfig -a

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:124 errors:0 dropped:0 overruns:0 frame:0

TX packets:96 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:13954 (13.6 KiB) TX bytes:14026 (13.6 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

dockertrap:~# exit

Connection to localhost closed.

root@dockertrap:~#

Note that the ethernet device eth0 above is configured with an IP address of 172.17.0.2 and a MAC address of 02:42:AC:11:00:02. When logging in from an IP address other than the host’s localhost, such as my home PC, DockerTrap will spawn a new, yet nearly identical container for me to log into (except for the IP and MAC address, of course).

C:\Users\user>ssh root@35.238.100.5 -p 22

The authenticity of host '35.238.100.5 (35.238.100.5)' can't be established.

ECDSA key fingerprint is SHA256:VKG+5VhB0WL5ncPomfmb+XW484LtjS8oAs+BDM07sJQ.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '35.238.100.5' (ECDSA) to the list of known hosts.

root@35.238.100.5's password:

Welcome to Alpine!

The Alpine Wiki contains a large amount of how-to guides and general

information about administrating Alpine systems.

See <http://wiki.alpinelinux.org/>.

You can setup the system with the command: setup-alpine

You may change this message by editing /etc/motd.

honeypot2:~# ifconfig -a

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:09

inet addr:172.17.0.9 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:72 errors:0 dropped:0 overruns:0 frame:0

TX packets:50 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:7593 (7.4 KiB) TX bytes:7041 (6.8 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

honeypot2:~# exit

Connection to 35.238.100.5 closed.

C:\Users\user>

The IP address and MAC address are different, yet the hostname is the same. After a while, we will notice that an increasing number of containers begin to spin up on the host, as random bots connect to port 22. It is worth noting that in order to prevent a memory resource attack, you will want to edit the /etc/xinetd.d/honeypot file so that xinetd limits the number of instances.

root@dockertrap:/usr/bin# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4971d1c8272a honeypot "/entrypoint.sh" 17 minutes ago Up 17 minutes 22/tcp honeypot-175.111.182.186

bf4f9b94ad03 honeypot "/entrypoint.sh" 27 minutes ago Up 27 minutes 22/tcp honeypot-58.96.198.15

e69231243915 honeypot "/entrypoint.sh" 29 minutes ago Up 29 minutes 22/tcp honeypot-

906c4e2be5c7 honeypot "/entrypoint.sh" 30 minutes ago Up 30 minutes 22/tcp honeypot-10.128.0.46

root@dockertrap:/usr/bin#
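To cap the number of simultaneous launcher instances mentioned above, the instances attribute in the xinetd service file can be changed from UNLIMITED to a number. The helper below is a sketch: its name and the limit of 20 are arbitrary choices for illustration, and it assumes GNU sed.

```shell
#!/bin/bash
# Replace the value of the "instances" attribute in an xinetd service file.
limit_xinetd_instances() {
    local file="$1" max="$2"
    # Refuse anything that is not a plain number
    [[ "$max" =~ ^[0-9]+$ ]] || return 1
    sed -i -E \
        "s/^([[:space:]]*instances[[:space:]]*=[[:space:]]*).*/\1${max}/" \
        "$file"
}

# Usage (as root):
#   limit_xinetd_instances /etc/xinetd.d/honeypot 20
#   systemctl restart xinetd
```

xinetd also has a per_source attribute that limits instances per source address, which may be worth a look in xinetd.conf(5) if a single address is responsible for most of the load.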

Future Work

Much of the design of DockerTrap can be applied to Kubernetes. Similar to how Docker enables resource restrictions here, K8s supports advanced security features, including network policies, which play a role comparable to the iptables rules used here. The API honeypot apitrap.sh can also be replaced by a more robust system like Whaler, which would help identify compromised systems that specifically seek out misconfigured Docker hosts.

Event Log

- [02/11/20] Initial Commit v1.0

- [02/11/20] Submitted to appfleet
